Machine Learning

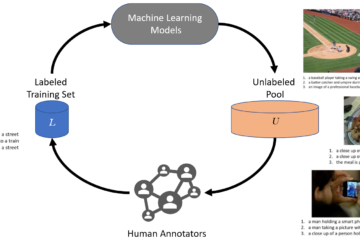

Active Learning for Medical

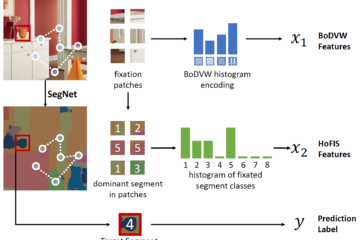

Image Segmentation

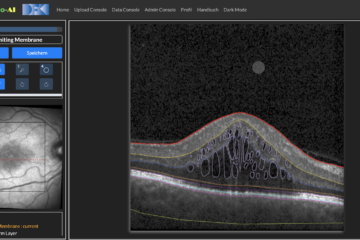

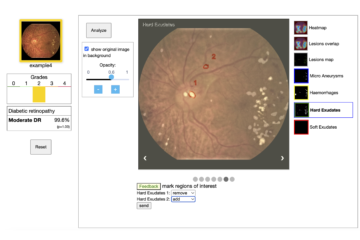

Ophthalmo-AI

Age-related macular degeneration (AMD) is the primary cause of severe vision loss in the Western world. One cause is the retinal accumulation of harmful byproducts of metabolic processes occurring in the eye. Treatment aims to slow disease progression and is performed by injecting anti-VEGF agents into …