Visual Search Target Inference in Natural Interaction Settings with Machine Learning

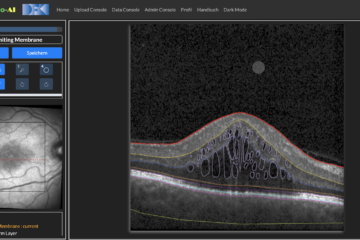

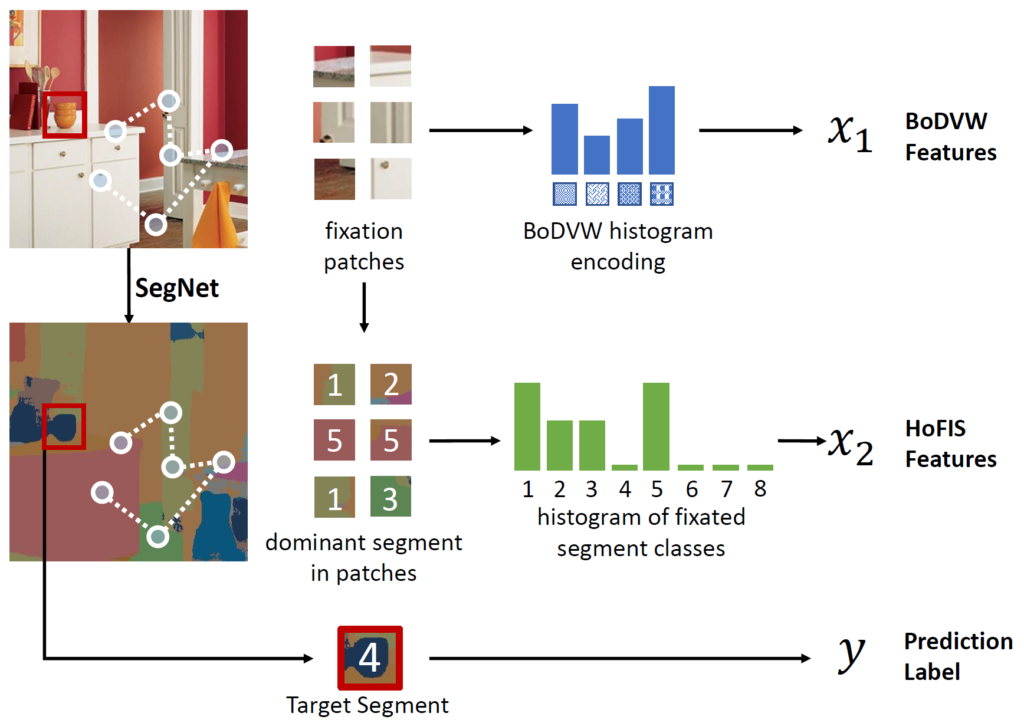

Visual search is a perceptual task in which humans aim to identify a search target object, such as a traffic sign, among other objects. Search target inference comprises computational methods that predict this target by tracking and analyzing a person's overt behavioral cues, e.g., their gaze and the fixated visual stimuli. In [Barz et al., 2020], we present a generic approach to inferring search targets in natural scenes by predicting the class of the image segment surrounding the target. Our method encodes visual search sequences as histograms of fixated segment classes, which are determined by SegNet, a deep learning image segmentation model for natural scenes. We compare our sequence encoding and model training (an SVM) against a recent baseline from the literature for predicting the target segment. In addition, we use a new search target inference dataset.
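The sequence encoding described above can be sketched as follows. This is a minimal illustration, not the published implementation: it assumes the segmentation output is already available as a per-pixel label map (`label_map[y][x]` holds a class index, as SegNet would produce) and that fixations are given as `(x, y)` pixel coordinates. The names `segment_class_histogram` and `Fixation` are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical type: (x, y) pixel coordinates of one fixation.
Fixation = Tuple[int, int]


def segment_class_histogram(
    fixations: Sequence[Fixation],
    label_map: Sequence[Sequence[int]],
    num_classes: int,
) -> List[float]:
    """Encode one visual search sequence as a normalized histogram of fixated segment classes.

    Assumption: label_map[y][x] is the class index a segmentation model
    (SegNet in the paper) assigned to pixel (x, y).
    """
    height, width = len(label_map), len(label_map[0])
    counts: Counter = Counter()
    for x, y in fixations:
        # Skip fixations that fall outside the image (e.g., tracker noise).
        if not (0 <= x < width and 0 <= y < height):
            continue
        label = label_map[y][x]
        # Only count class indices the histogram has a bin for.
        if 0 <= label < num_classes:
            counts[label] += 1
    total = sum(counts.values())
    if total == 0:
        return [0.0] * num_classes
    # Relative frequency of each fixated segment class.
    return [counts[c] / total for c in range(num_classes)]


if __name__ == "__main__":
    toy_label_map = [[0, 0, 1], [2, 2, 1]]  # 2 x 3 image with 3 classes
    seq = [(0, 0), (2, 0), (2, 1), (1, 1)]
    print(segment_class_histogram(seq, toy_label_map, num_classes=3))
    # prints [0.25, 0.5, 0.25]
```

Normalizing by the number of fixations makes sequences of different lengths comparable. In the paper, such histograms serve as input to an SVM; with scikit-learn, for example, a list of these vectors and their target classes could be passed to `sklearn.svm.SVC().fit(X, y)`.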

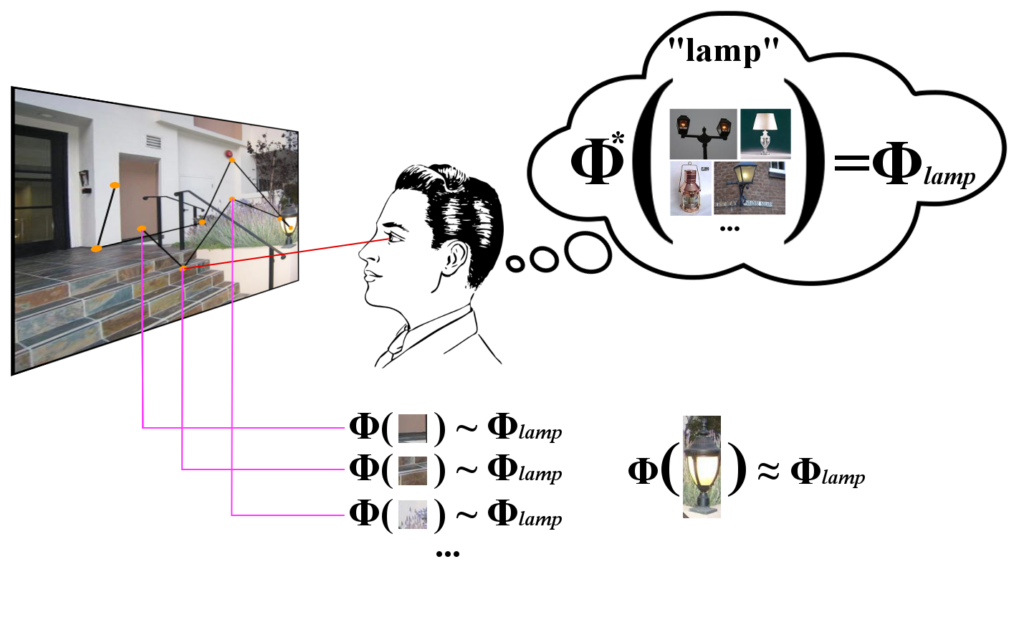

Visual Search Target Inference Using Bag of Deep Visual Words

In [Stauden et al., 2018], we implement a new feature encoding for search target inference, the Bag of Deep Visual Words, which uses a pre-trained convolutional neural network (CNN). Our work builds on a recent approach from the literature that uses the Bag of Visual Words encoding, a technique common in computer vision. We evaluate our method on a gold-standard dataset. The results show that our new feature encoding outperforms the baseline from the literature, in particular when fixations on the target are excluded. We presented this work at KI 2018, the 41st German Conference on Artificial Intelligence.
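A Bag of Deep Visual Words encoding can be sketched in two steps: build a codebook of "visual words" by clustering CNN feature vectors, then describe each search sequence by how often its fixations map to each word. This is a minimal sketch under stated assumptions: the CNN features of the image patches around fixations are assumed to be precomputed (toy 2-D vectors stand in for them here), and the codebook is built with plain k-means, the usual choice for Bag of Visual Words. The function names are hypothetical.

```python
from __future__ import annotations

from typing import List, Sequence

Vector = List[float]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_word(codebook: Sequence[Vector], feature: Sequence[float]) -> int:
    """Index of the codebook entry (visual word) closest to the feature vector."""
    return min(range(len(codebook)), key=lambda k: _sq_dist(codebook[k], feature))


def build_codebook(features: Sequence[Sequence[float]], k: int, iterations: int = 20) -> List[Vector]:
    """Plain k-means (Lloyd's algorithm), seeded with the first k features for determinism."""
    if not 0 < k <= len(features):
        raise ValueError("k must be between 1 and the number of features")
    centroids = [list(f) for f in features[:k]]
    for _ in range(iterations):
        clusters: List[List[Sequence[float]]] = [[] for _ in range(k)]
        for f in features:
            clusters[nearest_word(centroids, f)].append(f)
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster
        if new_centroids == centroids:  # assignments are stable: converged
            break
        centroids = new_centroids
    return centroids


def bag_of_deep_visual_words(sequence_features: Sequence[Sequence[float]], codebook: Sequence[Vector]) -> List[float]:
    """Normalized histogram of visual-word assignments for one search sequence."""
    counts = [0] * len(codebook)
    for f in sequence_features:
        counts[nearest_word(codebook, f)] += 1
    total = len(sequence_features)
    return [c / total for c in counts] if total else [0.0] * len(codebook)


if __name__ == "__main__":
    # Toy stand-ins for CNN features of fixated patches from the training data.
    train = [[0, 0], [10, 10], [0, 1], [10, 11], [1, 0], [11, 10]]
    words = build_codebook(train, k=2)
    print(bag_of_deep_visual_words([[0.5, 0.5], [9, 9], [10, 10], [0, 0]], words))
    # prints [0.5, 0.5]
```

Excluding fixations on the target, as in the evaluation described above, would simply mean dropping those feature vectors from `sequence_features` before encoding.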

References

- [Stauden et al., 2018] Visual Search Target Inference Using Bag of Deep Visual Words. In: Trollmann, Frank; Turhan, Anni-Yasmin (Eds.): KI 2018: Advances in Artificial Intelligence - 41st German Conference on AI, Springer, 2018.

- [Barz et al., 2020] Visual Search Target Inference in Natural Interaction Settings with Machine Learning. In: Bulling, Andreas; Huckauf, Anke; Jain, Eakta; Radach, Ralph; Weiskopf, Daniel (Eds.): ACM Symposium on Eye Tracking Research and Applications, Association for Computing Machinery, 2020.

Dataset

To support the reproducibility of our results, we provide our extensions of the datasets used. The documentation with annotations (without images) is available on GitHub: https://github.com/DFKI-Interactive-Machine-Learning/STI-Dataset

You can also download the whole dataset with images: