Learning through Human-Robot Dialogues

Today, robots are present in our lives and they will be even more ubiquitous in the future. This advance of robotic technologies also poses the question how humans can guide the behavior of a robot and, in particular, how non-specialists can advise robots to learn new tasks. Different research areas are concerned with this topic including, but not limited to, learning from demonstration, observational learning, interactive task learning and grounded language learning. We are interested in investigating multimodal techniques across the different topics. A particular focus lies on eye tracking in combination with speech-based interaction.

Our Prototype at HRI 2017

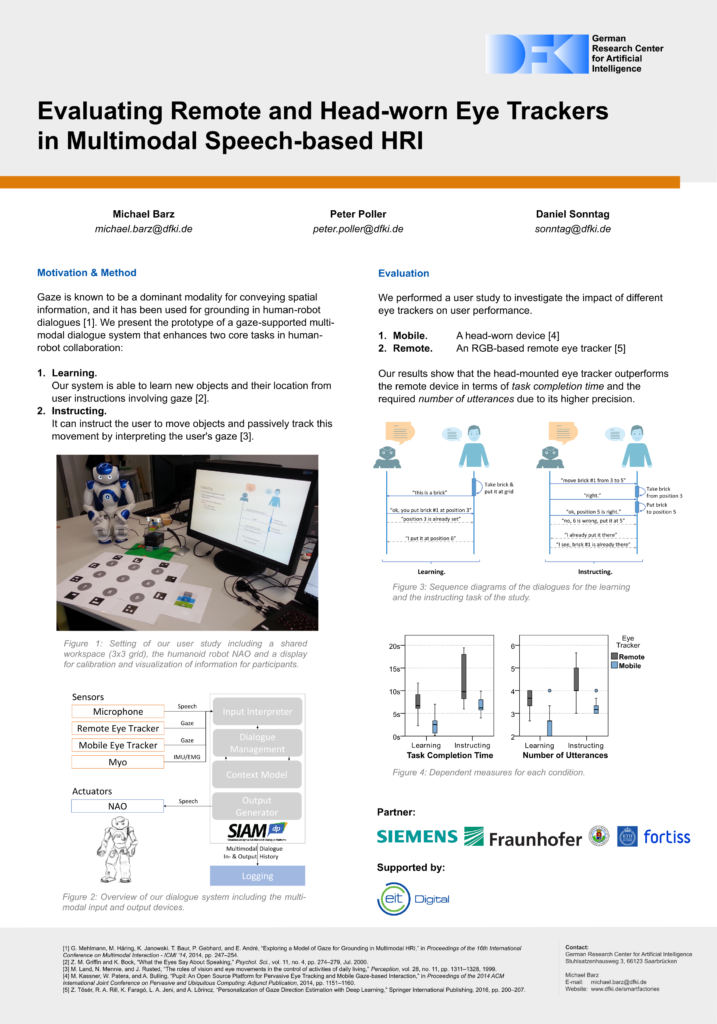

Gaze is known to be a dominant modality for conveying spatial information, and it has been used for grounding in human-robot dialogues. In this work, we present the prototype of a gaze-supported multi-modal dialogue system that enhances two core tasks in human-robot collaboration: 1) our robot is able to learn new objects and their location from user instructions involving gaze, and 2) it can instruct the user to move objects and passively track this movement by interpreting the user’s gaze.