Motivation

Interactive public displays are increasingly common, and a growing number of their applications handle sensitive data. This raises the demand for user authentication methods.

We implement a calibration-free authentication method for situated displays based on saccadic eye movements. It tackles two drawbacks of existing gaze-based authentication methods: they tend to be slow and error-prone.

Our work EyeLogin on gaze-based authentication received an honorable mention at ETRA 2021. [read the paper]

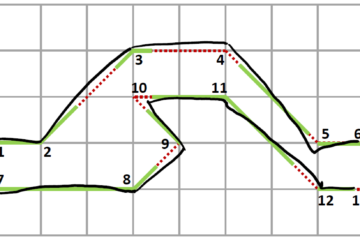

Figure 1: Interface of EyeLogin.

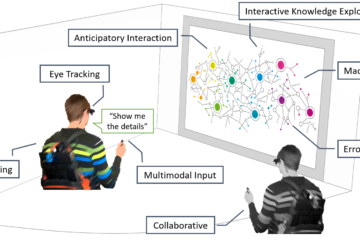

Figure 2: Interface of CueAuth [1].

Method

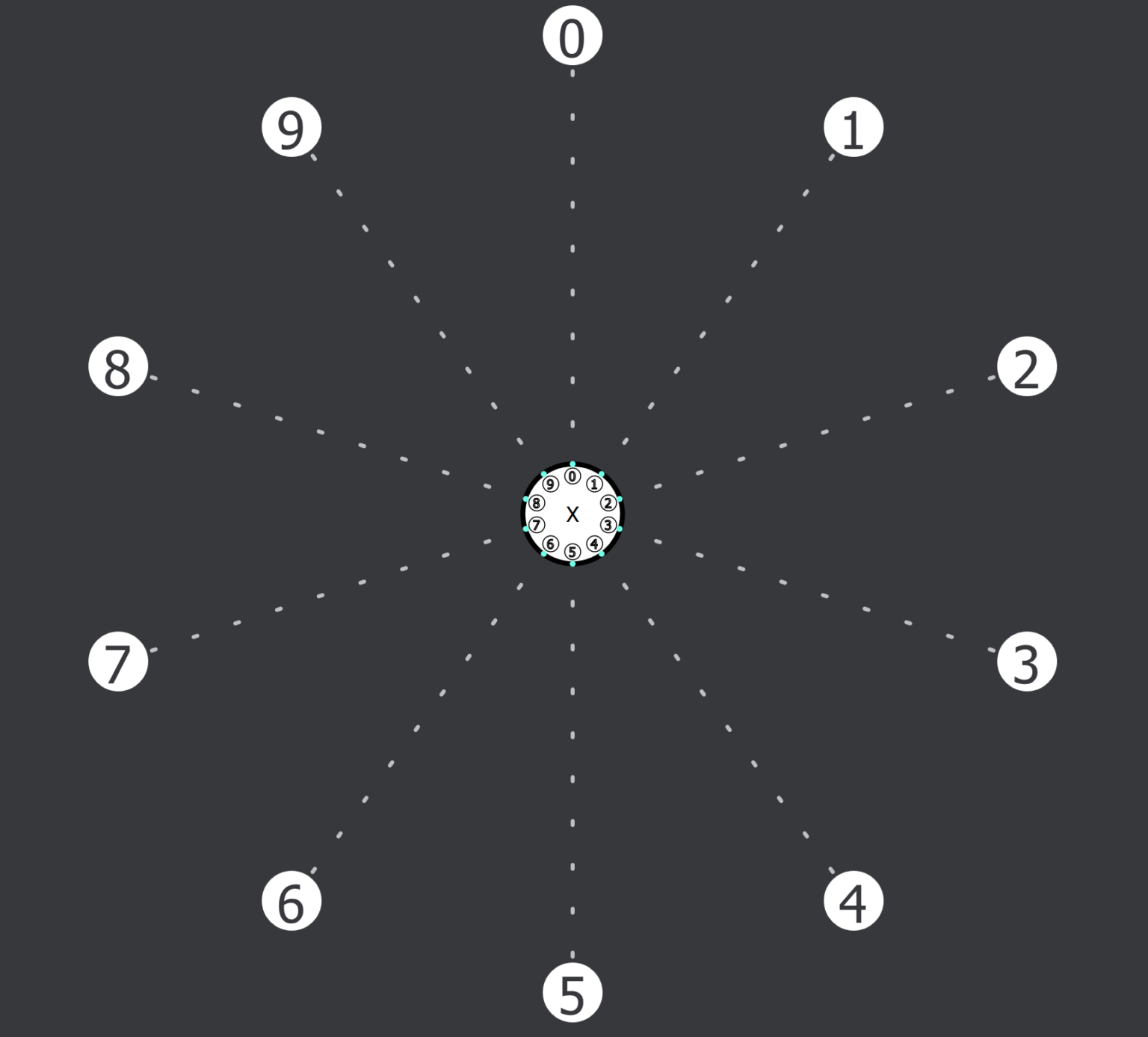

In our implementation, we leverage the quick nature of saccadic eye movements between fixations from the center to a digit in the interface (see Figure 1). Fixating a digit and returning to the center triggers a digit entry.
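The center-digit-center interaction can be sketched as a small decoder over a stream of fixations. This is a minimal illustration, not the EyeLogin implementation: the ring layout of ten digits, the `MIN_AMPLITUDE` threshold, and the names `classify_direction` and `decode_pin` are all assumptions made for the example. It takes the first fixation as the center and classifies only the direction of each outward saccade, so a constant gaze offset cancels out. That is one plausible way to avoid calibration, not necessarily the one used in the paper.

```python
import math

NUM_DIGITS = 10        # assumed: digits 0-9 evenly spaced on a ring
MIN_AMPLITUDE = 0.15   # assumed minimum saccade length (normalized units)


def classify_direction(dx, dy):
    """Map a saccade direction to the nearest of the evenly spaced digits."""
    angle = math.atan2(dy, dx) % (2 * math.pi)
    sector = 2 * math.pi / NUM_DIGITS
    return int((angle + sector / 2) // sector) % NUM_DIGITS


def decode_pin(fixations):
    """Decode digits from (x, y) fixations; the first one is taken as center."""
    pin = []
    center = fixations[0]
    candidate = None
    for x, y in fixations[1:]:
        dx, dy = x - center[0], y - center[1]
        away = math.hypot(dx, dy) >= MIN_AMPLITUDE
        if away and candidate is None:
            # Outward saccade: remember which digit it points at.
            candidate = classify_direction(dx, dy)
        elif not away and candidate is not None:
            # Return saccade to the center commits the digit.
            pin.append(candidate)
            candidate = None
    return pin
```

Because a digit is committed only on the return to the center, a fixation that drifts between digits before returning does not produce a second entry in this sketch.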

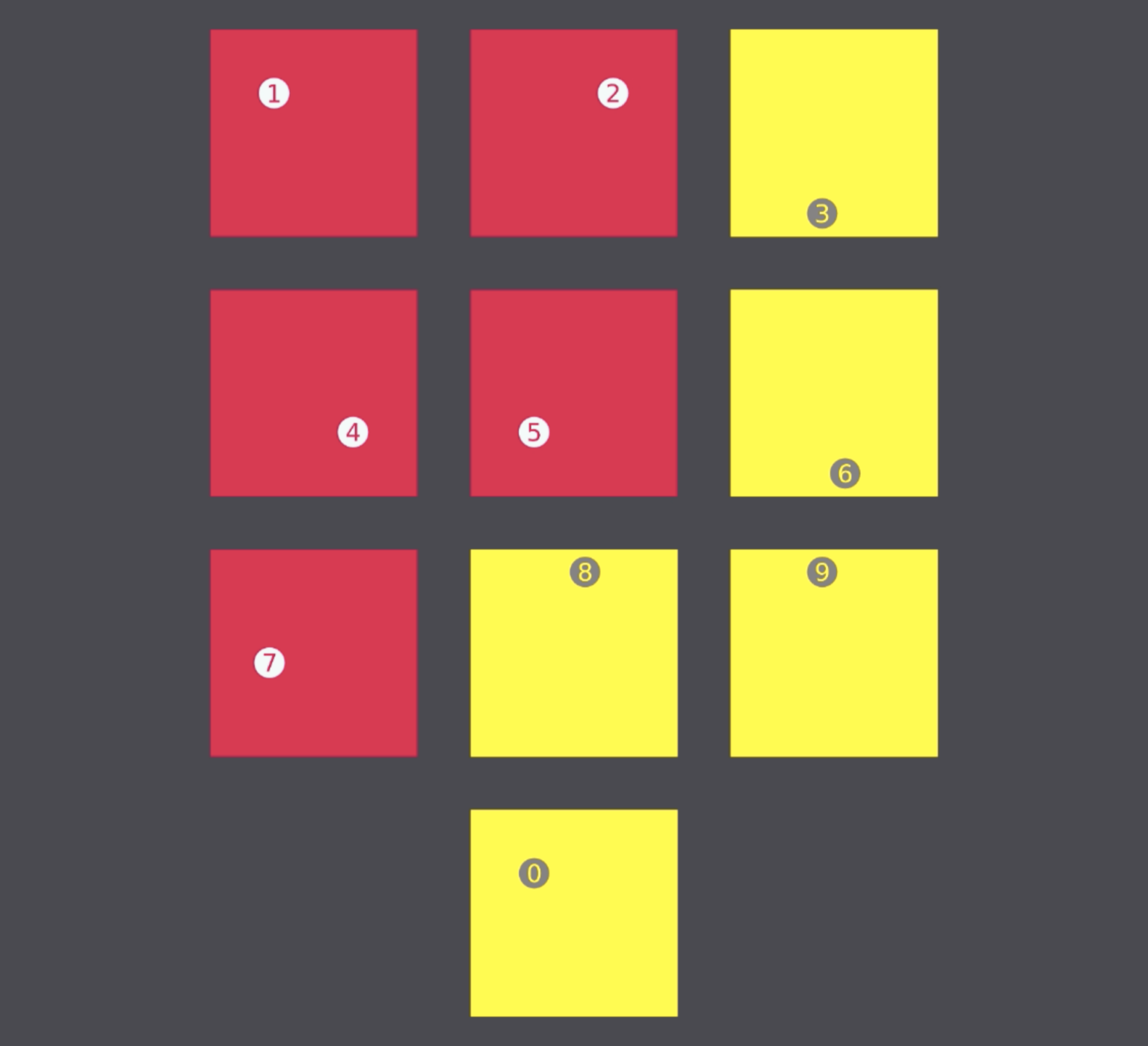

Additionally, we implement CueAuth [1], an authentication method based on smooth pursuit eye movements: a digit is entered by following a moving digit stimulus in the interface (see Figure 2).
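Pursuit-based selection is commonly done by correlating the gaze trajectory with the trajectory of each moving stimulus and picking the best match. The sketch below illustrates that general idea with a Pearson correlation per axis. The function names, the 0.8 threshold, and the choice to take the weaker of the two axis correlations are assumptions for this example and are not taken from CueAuth [1].

```python
import math


def pearson(a, b):
    """Pearson correlation of two equally long sequences (0.0 if constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def match_pursuit(gaze, stimuli, threshold=0.8):
    """Return the label of the stimulus the gaze follows, or None.

    gaze:    list of (x, y) gaze samples
    stimuli: dict mapping a label to a list of (x, y) positions,
             sampled at the same times as the gaze
    """
    gx = [p[0] for p in gaze]
    gy = [p[1] for p in gaze]
    best_label, best_score = None, threshold
    for label, path in stimuli.items():
        # Require both axes to correlate; use the weaker one as the score.
        score = min(pearson(gx, [p[0] for p in path]),
                    pearson(gy, [p[1] for p in path]))
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

Correlation ignores constant offsets and scaling, which is why this kind of matching also works without calibrating the eye tracker.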

Evaluation

In a user study (n=10), we compare our new method with CueAuth from Khamis et al. [1].

Our evaluation shows that EyeLogin outperforms the baseline CueAuth in both accuracy (95.88% vs. 82.94%) and PIN entry time (5.12 sec vs. 23.4 sec); see Table 1.

| Method | Accuracy | Entry time |
| --- | --- | --- |
| EyeLogin | 95.88% | 5.12 sec |
| CueAuth (baseline) | 82.94% | 23.4 sec |

Table 1: Results from our user study (n = 10), including the average accuracy of PIN entry and the average PIN entry time for EyeLogin and CueAuth.

References

[1] Mohamed Khamis, Ludwig Trotter, Ville Mäkelä, Emanuel von Zezschwitz, Jens Le, Andreas Bulling, and Florian Alt. CueAuth: Comparing Touch, Mid-Air Gestures, and Gaze for Cue-based Authentication on Situated Displays. In: Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2, 4, Article 174 (December 2018), 22 pages.