Detecting animal sounds is essential for scientific research and conservation, as it provides valuable insights into biodiversity, species behavior, and ecosystem health. Monitoring terrestrial and marine wildlife helps to reduce biodiversity loss and improve species conservation. To this end, passive acoustic monitoring (PAM) is often used as a non-invasive and cost-effective method that allows scientists to detect the presence of species through their vocalizations. By identifying species-specific sounds and tracking their patterns, PAM helps researchers understand species distribution, population trends, and behavior.

This information is critical for implementing effective conservation strategies. Animal sound repositories such as UNICAMP’s Fonoteca Neotropical Jacques Vielliard (FNJV) and Cornell University’s Macaulay Library provide rich resources to support scientific research. However, using these collections to train supervised machine learning algorithms is difficult because they are mostly weakly annotated: labels are given only at the file level, without identifying the time segments that contain specific animal calls. Besides the sound of interest that corresponds to the label, these files contain other signals such as human speech and/or calls of co-occurring species. Weakly supervised sound event detection (SED) has attracted considerable interest in recent years, a trend accelerated by the DCASE challenges and the release of datasets such as AudioSet.

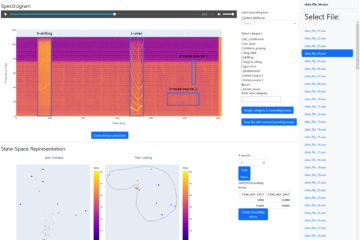

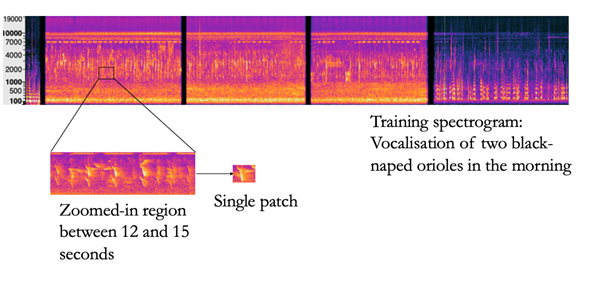

To address weakly supervised sound event detection for PAM, we plan to extract relevant signals using interpretable models that learn local patterns (prototypes) representative of the target classes. For this purpose, we designed a compact model based on ProtoPNet (Chen et al., 2019) and, as an initial evaluation, trained it on the MNIST dataset, where it achieved a validation accuracy of 95.9%. Next, we aim to preprocess the recordings from the FNJV collection by converting them into spectrograms and feeding these to our prototype-based neural model. We will then visualize the learned prototypes responsible for the predictions. To keep a human in the loop, an interactive interface will let an expert triage the learned prototypes.
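To illustrate what a prototype layer computes, here is a minimal NumPy sketch of a ProtoPNet-style layer, assuming 1×1 prototypes compared against a CNN feature map. Following Chen et al. (2019), each prototype's squared L2 distance d to every latent patch is turned into a similarity log((d + 1) / (d + ε)) and max-pooled into one score per prototype, which a fully connected layer maps to class scores. The function names, shapes, and toy data are hypothetical and not taken from the authors' model; for a spectrogram input, the feature map's two spatial axes would correspond to frequency and time.

```python
import numpy as np


def prototype_similarities(features, prototypes, eps=1e-4):
    """ProtoPNet-style similarity between 1x1 prototypes and a feature map.

    features:   (H, W, D) latent feature map from a CNN backbone (assumed).
    prototypes: (P, D) learned prototype vectors (hypothetical shapes).
    Returns the (P,) max similarity per prototype and the (P, H, W) maps.
    """
    H, W, D = features.shape
    patches = features.reshape(-1, D)  # (H*W, D): one row per location

    # Squared L2 distance between every prototype and every patch: (P, H*W)
    dist = (
        np.sum(prototypes**2, axis=1, keepdims=True)
        - 2.0 * prototypes @ patches.T
        + np.sum(patches**2, axis=1)
    )
    dist = np.maximum(dist, 0.0)  # clamp small negatives from rounding

    # log((d + 1) / (d + eps)) is large for d near 0 and shrinks as d grows
    sim_maps = np.log((dist + 1.0) / (dist + eps)).reshape(-1, H, W)

    # Max-pool each map: how strongly the prototype appears anywhere
    return sim_maps.max(axis=(1, 2)), sim_maps


def class_logits(max_sims, last_layer):
    """Fully connected layer mapping (P,) similarities to (C,) class scores."""
    return last_layer @ max_sims


# Toy usage: 2 classes with 1 prototype each (illustrative values only)
rng = np.random.default_rng(0)
features = rng.normal(size=(4, 5, 8))  # e.g. (frequency, time, channels)
prototypes = np.stack([features[1, 2], features[3, 4]])  # copy two patches
max_sims, sim_maps = prototype_similarities(features, prototypes)
# ProtoPNet initializes the last layer with 1 for a class's own prototypes
# and -0.5 for the other classes' prototypes
last_layer = np.array([[1.0, -0.5], [-0.5, 1.0]])
logits = class_logits(max_sims, last_layer)
```

Each similarity map peaks where the prototype's patch occurs, so `np.unravel_index(sim_maps[0].argmax(), sim_maps[0].shape)` recovers the location the first prototype was copied from. This locality is what makes it possible to point at the part of the input responsible for a prediction.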

By learning local patches in either the input or the latent space, we expect to extract species-identifying animal calls from the multi-signal files in these collections. This process will generate strong (timestamp-level) annotations from initially weak (file-level) annotations, making these datasets suitable for supervised learning methods. This work is part of the project “Interactive Machine Learning Solutions for Acoustic Monitoring of Animal Wildlife in Biosphere Reserves”, accepted at IJCAI 2023.
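One possible way to turn a prototype's activation into strong annotations is sketched below: threshold a per-frame activation (for example, a prototype's similarity map max-pooled over the frequency axis) and convert each run of active frames into a start and end time. This is an illustrative assumption, not the project's confirmed procedure; the function name, the `frame_seconds` duration (which in practice depends on the STFT hop length and the backbone's temporal downsampling), and the threshold value are all hypothetical.

```python
import numpy as np


def activation_to_segments(activation, frame_seconds, threshold):
    """Turn a per-frame prototype activation into (start, end) times in seconds.

    activation:    1-D array of similarity scores, one per time frame.
    frame_seconds: duration of one frame in seconds (hypothetical; depends on
                   the STFT hop length and the model's temporal downsampling).
    threshold:     score above which a frame is taken to contain the call
                   (an assumed value, e.g. chosen by an expert during triage).
    """
    active = np.asarray(activation) > threshold
    # Pad with False so every run of active frames has a rising and falling edge
    padded = np.concatenate([[False], active, [False]]).astype(int)
    edges = np.flatnonzero(np.diff(padded))
    starts, ends = edges[0::2], edges[1::2]  # ends are exclusive frame indices
    return [
        (float(s * frame_seconds), float(e * frame_seconds))
        for s, e in zip(starts, ends)
    ]


segments = activation_to_segments(
    [0.1, 2.0, 3.0, 0.2, 0.0, 5.0], frame_seconds=0.5, threshold=1.0
)
# → [(0.5, 1.5), (2.5, 3.0)]
```

Each returned interval is a timestamp-level label for the file's species, which is the kind of strong annotation that supervised SED methods expect.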

References

Chen, Chaofan, et al. “This Looks Like That: Deep Learning for Interpretable Image Recognition.” Advances in Neural Information Processing Systems 32 (2019).