Visual Attention and the Artificial Episodic Memory

Recent advances in mobile eye tracking technologies have opened the way to designing novel attention-aware intelligent user interaction. In particular, the gaze signal can be used to create or improve artificial episodic memories for both offline and real-time processing. We investigate different methods for modelling the user’s visual attention with respect to several facets: When is the gaze signal relevant for the current interaction? What is the user focusing on at that time? How can this information be used to efficiently improve the utility of a user interaction system? How can user interaction, in turn, improve visual attention modelling?

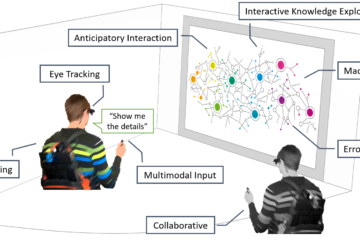

The classification of fixated objects can, for example, enable situated human-robot interaction, and the identification of visual search targets can enhance anticipatory user support systems. Our goal is to develop interactive training mechanisms that immediately incorporate user feedback into the learning process. In the future, we aim to develop multimodal interaction techniques for more efficient training, including in augmented reality settings.

Gaze-guided Object Classification using Deep Neural Networks

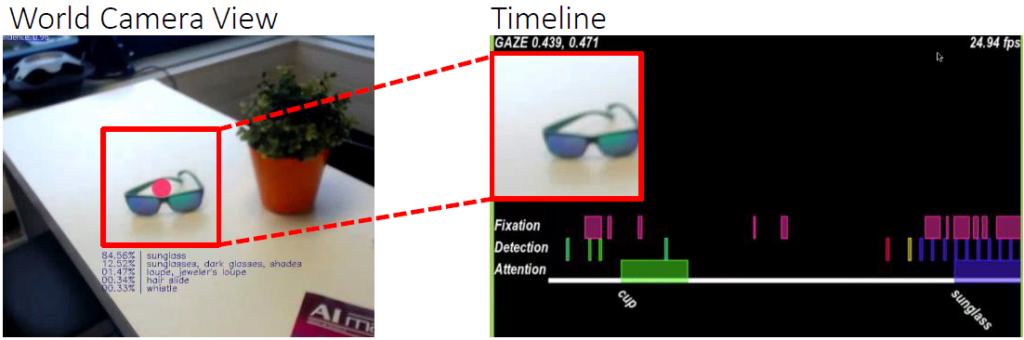

At UbiComp 2016, we presented a system that (1) uses the gaze signal and the eye tracker's egocentric camera to identify the objects the user focuses on; (2) employs object classification based on deep learning, which we recompiled for our purposes on a GPU-based image classification server; (3) detects whether the user actually pays attention to that object; and (4) combines these modules to construct episodic memories of egocentric events in real time [Barz and Sonntag, 2016].
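The four steps above can be illustrated with a minimal Python sketch. It is not the published implementation: all names (`GazeSample`, `Episode`, `crop_around_gaze`, `detect_fixations`, `build_episodic_memory`) are hypothetical, the attention check is approximated by a simplified dispersion-threshold fixation detector (an assumption, since the text does not state how attention is detected), the thresholds are arbitrary, and the deep-learning classifier is replaced by a caller-supplied function standing in for the image classification server.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical frame type: a grayscale image as rows of pixel values.
Frame = List[List[int]]


@dataclass
class GazeSample:
    t: float  # timestamp in seconds
    x: int    # gaze position in scene-camera pixel coordinates
    y: int


@dataclass
class Episode:
    start: float  # time the fixation began
    end: float    # time the fixation ended
    label: str    # class label returned by the classifier


def crop_around_gaze(frame: Frame, x: int, y: int, half: int) -> Frame:
    """Step 1: cut a square patch centred on the gaze point, clamped to the frame."""
    height, width = len(frame), len(frame[0])
    top, bottom = max(0, y - half), min(height, y + half + 1)
    left, right = max(0, x - half), min(width, x + half + 1)
    return [row[left:right] for row in frame[top:bottom]]


def dispersion(window: List[GazeSample]) -> int:
    """Spatial spread of a window of samples: x-range plus y-range."""
    xs = [s.x for s in window]
    ys = [s.y for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: List[GazeSample],
                     max_dispersion: int = 30,
                     min_duration: float = 0.2) -> List[List[GazeSample]]:
    """Step 3 (assumed method): group samples that stay close together long enough."""
    fixations = []
    i = 0
    while i < len(samples):
        j = i + 1
        # Grow the window while the gaze stays within the dispersion threshold.
        while j < len(samples) and dispersion(samples[i:j + 1]) <= max_dispersion:
            j += 1
        window = samples[i:j]
        if window[-1].t - window[0].t >= min_duration:
            fixations.append(window)
            i = j
        else:
            i += 1  # too short to count as attention; slide on by one sample
    return fixations


def build_episodic_memory(samples: List[GazeSample],
                          frame_at: Callable[[float], Frame],
                          classify: Callable[[Frame], str],
                          half: int = 2) -> List[Episode]:
    """Step 4: one memory entry per fixation, labelled by the classifier (step 2)."""
    memory = []
    for fix in detect_fixations(samples):
        cx = int(round(sum(s.x for s in fix) / len(fix)))
        cy = int(round(sum(s.y for s in fix) / len(fix)))
        # Use the scene frame at the temporal middle of the fixation.
        frame = frame_at(fix[len(fix) // 2].t)
        patch = crop_around_gaze(frame, cx, cy, half)
        memory.append(Episode(fix[0].t, fix[-1].t, classify(patch)))
    return memory
```

In this sketch, `frame_at` maps a timestamp to the corresponding egocentric camera frame, and `classify` would wrap a request to the classification server. Keeping both as parameters means the gaze logic can be tried out with synthetic frames and a stub classifier.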

References

- Barz, M. and Sonntag, D.: Gaze-guided Object Classification Using Deep Neural Networks for Attention-based Computing. In: Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, pp. 253-256, ACM, 2016.