Pen-and-paper cognitive assessments have recently been the subject of debate because of their limitations. For example, the collected material is monomodal (written form only), and it is not directly digitized for further automatic processing. Here we show how such cognitive assessments can be fully digitized in order to improve everyday practice in clinics and at home. As an example, we present a fully automated, web-based system for administering and evaluating the Mini-Mental State Examination (MMSE). It is based on a speech dialogue system and a smartpen and allows us to evaluate cognitive impairment using AI technologies.

Dementia is a general term for a decline in mental ability severe enough to interfere with daily life. The internationally used Mini-Mental State Examination is a 30-point questionnaire that is used extensively in medicine and research to measure cognitive impairment. Depending on the experience of the physician and the cognitive state of the patient, administering the test takes between 5 and 10 minutes. It examines functions including awareness, attention, recall, language, the ability to follow simple commands, and orientation. Owing to its standardisation, validity, short administration time and ease of use, it is widely used as a reliable screening tool for dementia.

We use inexpensive, off-the-shelf consumer hardware to capture speech and handwriting, allowing us to evaluate cognitive impairment automatically. The ease of use and wide availability of this hardware make the system accessible to both clinicians and home users, and the system can be used to gather large data sets. For the speech dialogue we employ a Google speech assistant in conjunction with a custom dialogue manager running on our server. To capture tasks involving handwriting we use a digital pen, which streams the user’s input to our server. Starting from the traditional scoring method, we developed an automated version that scores user input in real time, analyzing both the spoken and the sketched parts of the MMSE.
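To make the idea of automated scoring concrete, the following is a minimal sketch for one spoken MMSE item, the serial-sevens attention task (counting down from 100 in steps of 7). It is not the system's actual scoring code. The function name `score_serial_sevens` and the convention of comparing each answer with the previously given answer are assumptions made for illustration, and the answers are assumed to arrive as integers already parsed from the speech recognition result.

```python
from typing import List, Optional


def score_serial_sevens(
    answers: List[Optional[int]], start: int = 100, steps: int = 5
) -> int:
    """Score the serial-sevens task with one point per correct subtraction.

    Assumption: each answer is compared with the previous *given* answer
    minus 7, so a single slip costs one point instead of invalidating
    the rest of the sequence. None marks an answer that could not be parsed.
    """
    score = 0
    previous = start
    for answer in answers[:steps]:
        if answer is None:
            # Unparsed answer: no point, keep the last valid reference value.
            continue
        if answer == previous - 7:
            score += 1
        previous = answer
    return score
```

With this convention, the sequence 93, 85, 78, 71, 64 loses only the point for the second answer, because every later answer is again exactly seven less than its predecessor.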

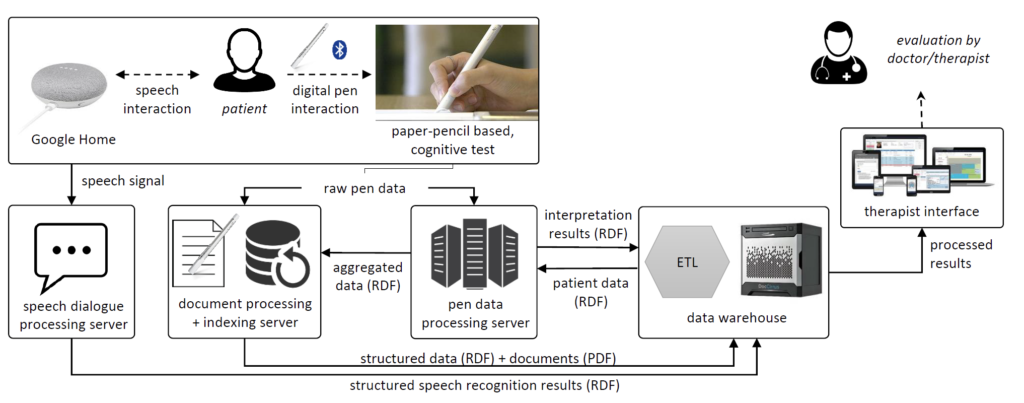

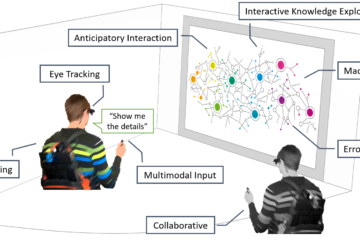

The technical architecture of our distributed, web-based infrastructure is shown above. At the computational level there are two intelligent user interfaces: one for the patient (speech and digital pen interaction) and one for the caregiver (therapist interface). Raw pen data is sent to the document processing and indexing server. The pen data processing server provides aggregated pen events as content-based interpretations in RDF (Resource Description Framework). The resulting documents are sent to the data warehouse together with the RDF-structured data, which contains, for example, the recognised shapes, text and text labels. The system attempts to classify each pen stroke and stroke group in a drawing. Speech input from the patient is captured and sent to the dialogue processing server, which provides structured speech recognition results.

Our second user interface is built on the data in the data warehouse and is designed for the practicing clinician. This therapist interface, where the real-time interpretations of the speech dialogue and stroke data are available in RDF, is meant to advance existing neuropsychological testing technology. We display (1) summary statistics of assessment performances, (2) transcribed and scored speech input, (3) real-time test parameters of the sketch tests, and (4) real-time information about pen features such as tremor and in-air time of the digital pen.

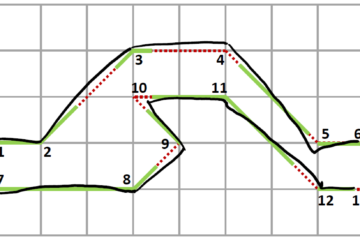

These visualisations are based on a set of over 100 signal-level features that describe the dynamic process of writing. Overall, we devised a set of algorithms and classifiers that convert imprecise requirements from the original scoring system, such as “All 10 angles must be present and two must intersect”, into unbiased scores based on the raw handwriting input. Our caregiver interface also replays previously captured real-time data (e.g., for a slow-motion playback) and classifies the high-precision information about the filling process, opening up the possibility of detecting and visualising subtle cognitive impairments.
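As an illustration of how a rule like the one quoted above could be turned into a deterministic check, here is a minimal sketch. It assumes that an upstream stroke classifier has already reduced the drawing to two polygons given as vertex lists. It then tests that each polygon has five corners (ten angles in total) and that their outlines cross. The names `score_pentagons`, `_orient`, `_segments_cross` and `_edges` are hypothetical, and the classifiers used in the actual system are not described here.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Signed area test: >0 counter-clockwise, <0 clockwise, 0 collinear."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def _segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if the two segments properly cross (shared endpoints do not count)."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def _edges(polygon: List[Point]) -> List[Tuple[Point, Point]]:
    """Closed outline of a polygon as a list of consecutive vertex pairs."""
    n = len(polygon)
    return [(polygon[i], polygon[(i + 1) % n]) for i in range(n)]


def score_pentagons(first: List[Point], second: List[Point]) -> int:
    """Return 1 if both shapes have five corners and their outlines cross, else 0."""
    has_ten_angles = len(first) == 5 and len(second) == 5
    outlines_cross = any(
        _segments_cross(a, b, c, d)
        for a, b in _edges(first)
        for c, d in _edges(second)
    )
    return 1 if has_ten_angles and outlines_cross else 0
```

The check is deliberately strict about vertex counts. In practice the harder problem is the step assumed away here, namely recovering clean corner points from noisy, tremulous strokes.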

In addition, we take into account data from the smartpen’s multiple sensors, which a human observer currently cannot measure easily. Information about velocity, pressure, in-air time and other features can provide vital insights into the patient’s cognitive state and handwriting behavior. We are currently extending our system to provide such feedback to therapists, based on more than one hundred distinct handwriting features.
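The following sketch shows how three of the features named above (in-air time, velocity and pressure) might be derived from a stream of timestamped pen samples. The `PenSample` layout, the convention that a pressure of 0.0 means the pen is hovering, and the function name `pen_features` are assumptions for illustration. The actual smartpen data format and the full feature set are not reproduced here.

```python
from dataclasses import dataclass
from math import hypot
from typing import Dict, List


@dataclass
class PenSample:
    t: float         # timestamp in seconds
    x: float         # pen position, arbitrary length unit
    y: float
    pressure: float  # assumed convention: 0.0 means the pen is in the air


def pen_features(samples: List[PenSample]) -> Dict[str, float]:
    """Compute in-air time, mean on-paper velocity and mean on-paper pressure."""
    air_time = 0.0
    paper_time = 0.0
    path_length = 0.0
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if prev.pressure > 0 and cur.pressure > 0:
            # Both ends of the interval touch the paper: count it as writing.
            paper_time += dt
            path_length += hypot(cur.x - prev.x, cur.y - prev.y)
        else:
            # At least one end is hovering: count the interval as in-air.
            air_time += dt
    on_paper = [s.pressure for s in samples if s.pressure > 0]
    return {
        "air_time": air_time,
        "mean_velocity": path_length / paper_time if paper_time > 0 else 0.0,
        "mean_pressure": sum(on_paper) / len(on_paper) if on_paper else 0.0,
    }
```

Intervals that begin or end with a hovering sample are counted as in-air time, which is one of several reasonable conventions. A real pipeline would also need to handle sensor noise and irregular sampling.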

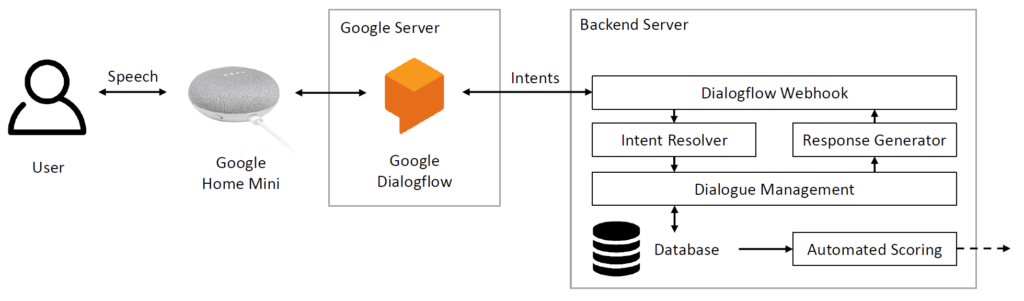

Our current setup consists of three main components: a speech dialogue hardware interface, a cloud-based speech recognition service and a backend server. To allow easy deployment and large-scale usage, we decided to use the Google Home Mini as the hardware device and frontend for the speech dialogue. It contains a speaker and a microphone and can connect to a wireless network. We deployed our custom application to the device using the Google Dialogflow framework, which receives the input coming from the hardware device. Our custom backend service is registered with our Google Dialogflow application, enabling us to manage the dialogue and evaluate the given answers directly in real time.
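A backend of this kind can be sketched as a function that takes the JSON body of a Dialogflow webhook call and returns the reply together with a score. The sketch assumes the Dialogflow ES (v2) webhook format, in which the request carries `queryResult.intent.displayName` and `queryResult.parameters` and the spoken reply is read from `fulfillmentText`. Which Dialogflow edition the system uses is not stated here. The intent name `orientation.year`, the parameter name `year` and the function `handle_webhook` are hypothetical, and the HTTP layer is left out.

```python
from datetime import date
from typing import Any, Dict, Optional, Tuple


def handle_webhook(
    request: Dict[str, Any], today: Optional[date] = None
) -> Tuple[Dict[str, Any], Optional[int]]:
    """Return (webhook response, score) for one dialogue turn.

    The score is None when the turn carries no scorable answer.
    """
    today = today or date.today()
    query = request.get("queryResult", {})
    intent = query.get("intent", {}).get("displayName", "")
    params = query.get("parameters", {})

    if intent == "orientation.year":  # hypothetical intent name
        try:
            # Numeric parameters may arrive as floats or strings.
            answered = int(params.get("year"))
        except (TypeError, ValueError):
            # Nothing usable was recognised: ask again, score nothing.
            return {"fulfillmentText": "Sorry, what year is it?"}, None
        score = 1 if answered == today.year else 0
        # The reply stays neutral; the score is kept on the server side.
        return {"fulfillmentText": "Thank you. What month is it?"}, score

    return {"fulfillmentText": "Let us continue with the next question."}, None
```

Keeping the handler free of HTTP concerns makes the scoring logic easy to test in isolation. Any web framework can wrap it by passing in the parsed request body and serialising the returned dictionary.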

References

- A categorisation and implementation of digital pen features for behaviour characterisation. In: Computing Research Repository eprint Journal, abs/1810.03970, pp. 1-42, 2018.

- Multimodal Speech-based Dialogue for the Mini-Mental State Examination. In: Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, pp. CS13:1-CS13:8, ACM, 2019.