Machine Learning

Comprehensive Evaluation of Feature Attribution Methods in Explainable AI via Input Perturbation

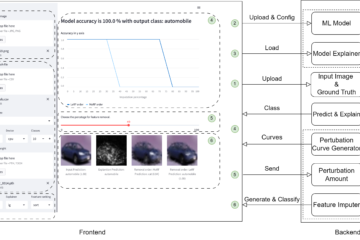

Explainable AI (XAI) has demonstrated its potential in deciphering discriminatory features in machine learning (ML) decision-making processes. Specifically, XAI’s feature attribution methods shed light on individual decisions made by ML models. However, despite their visual appeal, these attributions can be unfaithful. To ensure the faithfulness of feature attributions, it is…