The IML research department extends its GPU infrastructure with a powerful DGX-A100 (640 GB) to accelerate end-to-end GPU workflows, transfer learning, and interactive machine learning. The system is hosted at DFKI’s large deep learning center in Kaiserslautern.

The new NVIDIA DGX-A100 (640 GB) is expected to significantly accelerate AI research on interactive machine learning systems and to foster additional research on explainability (XAI) for practical use in industrial and medical applications. Sustainability also emerges as a new field of investigation for the IML group. Given the shorter model update times, we should be able to adhere to usability rules for interactive systems that are well known in the international research community: 1.0 second is about the limit for the user’s flow of thought to stay uninterrupted, even though the user will notice the delay, and 10 seconds is about the limit for keeping the user’s attention focused “on the dialogue.” Interactive image labelling is one application where these limits matter.
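The two response-time limits above can be turned into a simple check on model-update latency. The sketch below is a minimal illustration under stated assumptions: the function names `usability_band` and `timed_update`, the band labels, and the treatment of each limit as an inclusive upper bound are hypothetical choices, and `dummy_update` merely stands in for retraining a labelling model after a new annotation arrives.

```python
import time

# Response-time limits quoted in the text, in seconds (approximate by nature).
FLOW_LIMIT_S = 1.0        # user's flow of thought stays uninterrupted
ATTENTION_LIMIT_S = 10.0  # user's attention stays focused on the dialogue


def usability_band(seconds):
    """Classify a latency against the two limits (hypothetical labels)."""
    if seconds <= FLOW_LIMIT_S:
        return "flow kept"
    if seconds <= ATTENTION_LIMIT_S:
        return "attention kept"
    return "attention lost"


def timed_update(update_fn, *args):
    """Run a model-update callable and classify how long it took."""
    start = time.perf_counter()
    update_fn(*args)
    elapsed = time.perf_counter() - start
    return elapsed, usability_band(elapsed)


def dummy_update(n):
    # Hypothetical placeholder for retraining after a new image label.
    return sum(i * i for i in range(n))


elapsed, band = timed_update(dummy_update, 100_000)
print(f"update took {elapsed:.3f} s -> {band}")
```

In a real labelling tool, `dummy_update` would be replaced by the actual model update, and the band could drive interface decisions such as when to show a progress indicator.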

“One goal is to work towards interactive deep learning,” says Prof. Daniel Sonntag, director of the IML research department. “The second goal concerns large-scale pre-trained or foundation models that can now be computed for various application domains, and better exploitation of parallel operations and pre-computation will be investigated, too.”

AI is witnessing the next wave of developments with the rise of large-scale pre-trained or foundation models such as BERT [1], GPT-3 [2], and DALL-E [3], which are trained on broad data at scale and can be adapted to a wide range of downstream tasks. These foundation models have led to an extraordinary level of homogenization. For instance, since 2019 almost all state-of-the-art models in natural language processing have been adapted from one of a few foundation models, such as BERT [1], BART [4], or T5 [5], and this practice has become the norm. A similar trend can be observed across research communities working on images, speech, tabular data, biomedical data, and reinforcement learning [6].

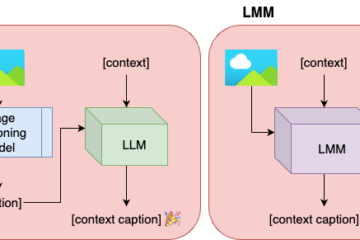

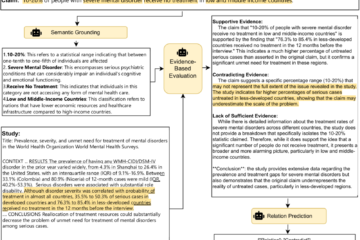

By harnessing the power of foundation models, the research community is optimistic about their societal applicability [6], especially in healthcare, where human interaction is integral. In particular, patient care and disease treatment usually require expert knowledge, which is scarce and expensive. Foundation models trained on abundant data across many modalities (e.g., images, text, molecules) present clear opportunities to transfer knowledge learned in related domains to a specific target domain and to make the adaptation step more efficient by reducing the expert time it requires. As a result, a prototype application can be deployed quickly without collecting large amounts of data or training large models from scratch. In the opposite direction, end users who directly use or are affected by these applications can provide feedback that steers these foundation models toward tailored models for their specific goals (Interactive Machine Learning, IML) [7] [8] [9] [10].
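The feedback loop described above can be illustrated with a deliberately tiny sketch. It assumes a frozen feature extractor (here a hypothetical fixed function, `frozen_encoder`, standing in for a pre-trained foundation model) and adapts only a small logistic-regression head, one user-provided label at a time, via a stochastic gradient step. `simulated_user` is a hypothetical oracle playing the role of the domain expert. This is not the method of the cited works; it only shows the principle that end-user feedback updates a lightweight model on top of frozen pre-trained representations.

```python
import math
import random


def frozen_encoder(x):
    """Hypothetical stand-in for a frozen pre-trained model (no trainable weights)."""
    x0, x1 = x
    return [x0, x1, x0 * x1, 1.0]  # the constant 1.0 acts as a bias feature


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class FeedbackHead:
    """Small logistic-regression head updated from one user label at a time."""

    def __init__(self, dim, lr=0.5):
        self.w = [0.0] * dim
        self.lr = lr

    def prob(self, x):
        return sigmoid(sum(wi * fi for wi, fi in zip(self.w, frozen_encoder(x))))

    def predict(self, x):
        return 1 if self.prob(x) >= 0.5 else 0

    def feedback(self, x, label):
        # One SGD step on the log-loss of a single labelled example;
        # the encoder stays frozen, only the head's weights change.
        f = frozen_encoder(x)
        err = self.prob(x) - label
        self.w = [wi - self.lr * err * fi for wi, fi in zip(self.w, f)]


def simulated_user(x):
    # Hypothetical expert: labels a point positive if x0 + x1 > 0.
    return 1 if x[0] + x[1] > 0 else 0


def accuracy(head, points):
    return sum(head.predict(p) == simulated_user(p) for p in points) / len(points)


rng = random.Random(0)
head = FeedbackHead(dim=4)
test_points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(500)]

before = accuracy(head, test_points)
for _ in range(1000):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    head.feedback(x, simulated_user(x))  # the user confirms or corrects the label
after = accuracy(head, test_points)
print(f"accuracy before feedback: {before:.2f}, after: {after:.2f}")
```

Keeping the encoder frozen means each feedback step touches only a handful of weights, which is one reason such updates can stay within interactive response times.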

However, obtaining such models at scale is challenging and depends on (i) the development of model architectures, (ii) the availability of large-scale training data, and (iii) especially modern hardware systems with powerful GPUs. For instance, training GPT-3 with 175 billion parameters would take approximately 288 years on a single NVIDIA V100 GPU and still about seven months on 512 V100 GPUs [11]. This obstacle calls for parallelization techniques that exploit the computation and memory capacity of modern GPUs. By adding a new DGX A100 server with eight NVIDIA A100 GPUs and 640 GB of total GPU memory, our IML department further strengthens DFKI’s existing deep learning infrastructure. In particular, this multi-GPU system provides 1.5 petaFLOPS of computing power, uses a high-bandwidth interconnect to link its GPUs, and enables massive parallelization across thousands of GPU cores.
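As a rough check on the figures above, and assuming perfect linear scaling (no communication overhead, which real systems never achieve), 288 GPU-years spread over 512 GPUs gives 288 × 12 / 512 = 6.75 months, consistent with the roughly seven months cited. The sketch below computes that estimate and, to illustrate one common parallel technique, simulates synchronous data parallelism on a toy 1-D linear model: the batch is split across eight simulated workers (matching the eight A100s), each computes a local gradient, and averaging them (the all-reduce step) reproduces the full-batch gradient. All function names are illustrative rather than part of any library, and training at GPT-3 scale typically combines data parallelism with tensor and pipeline model parallelism, as discussed in [11].

```python
import math


def ideal_months(single_gpu_years, num_gpus):
    """Wall-clock months if the work split perfectly across GPUs (no overhead)."""
    return single_gpu_years * 12 / num_gpus


def grad_mse(w, batch):
    """Gradient of the mean squared error of a 1-D linear model y ~ w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_grad(w, batch, num_workers):
    """Synchronous data parallelism: shard the batch, then average the gradients."""
    assert len(batch) % num_workers == 0, "use equal-sized shards"
    size = len(batch) // num_workers
    shards = [batch[i * size:(i + 1) * size] for i in range(num_workers)]
    # On real hardware each shard's gradient would be computed on its own GPU.
    local_grads = [grad_mse(w, shard) for shard in shards]
    # All-reduce step: every worker ends up with the mean of the local gradients.
    return sum(local_grads) / num_workers


print(ideal_months(288, 512))  # → 6.75

batch = [(float(x), 3.0 * x + 0.5) for x in range(64)]
full = grad_mse(0.0, batch)
parallel = data_parallel_grad(0.0, batch, num_workers=8)
print("gradients match:", math.isclose(full, parallel))  # → gradients match: True
```

Averaging only equals the full-batch gradient here because the shards have equal size; with unequal shards each local gradient would need to be weighted by its shard size.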

Given these powerful computational resources, the Interactive Machine Learning (IML) department can conduct research for the healthcare sector, for example by developing innovative foundation models that can be adapted to different medical purposes. To better understand the mechanisms underlying these models and to guide algorithm design, we will also investigate theoretical aspects of transfer learning and learning from multimodal data, and in particular work on improving the robustness and interpretability of trained foundation models.

References

[1] Devlin et al. (2019). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-HLT) (pp. 4171–4186).

[2] Korngiebel, D. M. & Mooney, S. D. (2021). Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery. NPJ Digital Medicine 4, 93.

[3] Ramesh et al. (2021). Zero-Shot Text-to-Image Generation. In Proceedings of the 38th International Conference on Machine Learning (ICML), Proceedings of Machine Learning Research 139 (pp. 8821–8831).

[4] Lewis et al. (2020). BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Association for Computational Linguistics (ACL) (pp. 7871–7880).

[5] Raffel et al. (2020). Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Journal of Machine Learning Research (JMLR), 21(140), 1–67.

[6] Bommasani et al. (2021). On the Opportunities and Risks of Foundation Models. arXiv preprint arXiv:2108.07258.

[7] Zacharias, J., Barz, M., & Sonntag, D. (2018). A survey on deep learning toolkits and libraries for intelligent user interfaces. Computing Research Repository eprint Journal (CoRR).

[8] Nunnari, F. & Sonntag, D. (2021). A software toolbox for deploying deep learning decision support systems with XAI capabilities. In Companion of the 2021 ACM SIGCHI Symposium on Engineering Interactive Computing Systems (pp. 44–49).

[9] Nguyen et al. (2020). A visually explainable learning system for skin lesion detection using multiscale input with attention U-Net. In German Conference on Artificial Intelligence (Künstliche Intelligenz) (pp. 313–319).

[10] Sonntag, D., Nunnari, F., & Profitlich, H. J. (2020). The Skincare project, an interactive deep learning system for differential diagnosis of malignant skin lesions. DFKI Research Reports (RR).

[11] Narayanan et al. (2021). Efficient large-scale language model training on GPU clusters. arXiv preprint arXiv:2104.04473.