Duy Nguyen, from the Interactive Machine Learning department, and colleagues from the University of Stuttgart, Oldenburg University, University of California San Diego, Stanford University, and other institutions presented a full paper on accelerating transformer models at NeurIPS 2024.

NeurIPS is considered one of the premier global conferences in the field of machine learning. The conference took place from December 10 to December 15, 2024, at the Vancouver Convention Center. This year, NeurIPS received a record-breaking 15,671 paper submissions, of which 4,037 were accepted, resulting in an acceptance rate of approximately 25.76%.

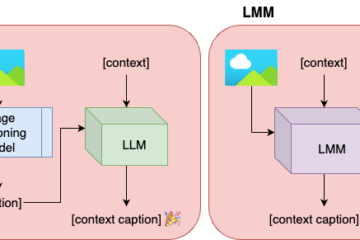

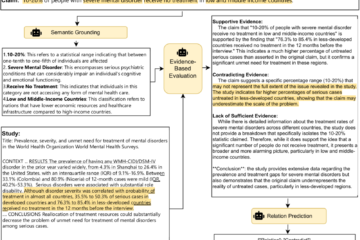

The accepted work, PiToMe, introduces a new token-merging algorithm for accelerating transformer-based models with spectrum preservation. We showed that PiToMe saves 40-60% of the FLOPs of the base models while exhibiting superior off-the-shelf performance (without any training steps) on image classification (0.5% average performance drop for ViT-MAE-H, compared to 2.6% for baselines), image-text retrieval (0.3% average performance drop for CLIP on Flickr30k, compared to 4.5% for other methods), and analogously in visual question answering with LLaVa-7B or LLaVa-13B.

Previous approaches employ Bipartite Soft Matching (BSM) with randomly partitioned token sets to identify the top-k similar tokens, which often makes them sensitive to the token-splitting strategy. In contrast, PiToMe prioritizes the preservation of informative tokens by introducing a new term called the energy score, inspired by spectral graph theory. This approach runs as fast as heuristic methods but comes with a theoretical guarantee that essential information will be retained.
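To make the contrast concrete, here is a minimal NumPy sketch of the two ingredients mentioned above. It is an illustration under stated assumptions, not the PiToMe implementation: `bipartite_soft_matching` follows the general BSM recipe (split tokens into two sets, match each token in one set to its most similar token in the other, merge the top-r pairs by averaging), and `energy_score` is a simplified stand-in that uses the mean cosine similarity to all other tokens, which may differ from the definition in the paper. All function names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Pairwise cosine similarity between rows of a and rows of b."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T


def bipartite_soft_matching(tokens, r, rng=None):
    """Merge r tokens with a BSM-style step; returns an (n - r, d) array.

    If rng is given, the split into sets A and B is random, which is the
    source of the sensitivity to the token-splitting strategy.
    """
    n = tokens.shape[0]
    idx = np.arange(n) if rng is None else rng.permutation(n)
    a_idx, b_idx = idx[0::2], idx[1::2]

    sim = cosine_similarity(tokens[a_idx], tokens[b_idx])  # shape (|A|, |B|)
    best_b = sim.argmax(axis=1)   # most similar B token for each A token
    best_sim = sim.max(axis=1)

    r = min(r, len(a_idx))
    order = np.argsort(-best_sim)            # most similar pairs first
    merged_a, kept_a = order[:r], order[r:]

    # Average each B token with the A tokens merged into it.
    sums = tokens[b_idx].astype(float)
    counts = np.ones(len(b_idx))
    np.add.at(sums, best_b[merged_a], tokens[a_idx[merged_a]])
    np.add.at(counts, best_b[merged_a], 1)
    merged_b = sums / counts[:, None]

    return np.concatenate([tokens[a_idx[kept_a]], merged_b], axis=0)


def energy_score(tokens):
    """Assumed, simplified score: mean cosine similarity to the other tokens.

    High values mark tokens in dense, redundant clusters (good merge
    candidates); low values mark isolated, informative tokens to protect.
    """
    sim = cosine_similarity(tokens, tokens)
    n = sim.shape[0]
    return (sim.sum(axis=1) - np.diag(sim)) / (n - 1)


rng = np.random.default_rng(0)
tokens = rng.normal(size=(8, 4))
reduced = bipartite_soft_matching(tokens, r=2, rng=rng)
print(reduced.shape)          # 8 tokens reduced by r=2
print(energy_score(tokens))   # one score per token
```

In this sketch, a score-guided variant would rank tokens by `energy_score` and only offer the highest-scoring ones for merging, instead of relying on a random split to decide which tokens may be merged.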

As with previous editions, NeurIPS 2024 offered a rich and diverse program featuring several invited speakers, 4,037 accepted posters, 14 tutorials, and 56 workshops. Noteworthy workshops included Responsibly Building the Next Generation of Multimodal Foundation Models; GenAI for Health: Potential, Trust, and Policy Compliance; and Fine-Tuning in Modern Machine Learning: Principles and Scalability. These workshops highlighted critical advancements and challenges in interpretable machine learning (IML) and related fields. Additionally, our department further strengthened its collaborations with leading biomedical research groups at Stanford University, fostering innovation at the intersection of AI and healthcare.

The presented work at NeurIPS 2024