In December 2025, IML member Duy Nguyen conducted a series of invited visits and technical discussions with leading academic and national research institutions in the United States. These visits focused on advancing foundational AI research and establishing long-term collaborations spanning robotics, AI for science, and healthcare AI.

Stanford University – Vision-Language-Action Models for Robotics

Stanford University, front entrance, Quad, Memorial Church.

Credit: Linda A. Cicero / Stanford News Service

During his visit to Stanford, Duy met with researchers at the Stanford Robotics Center to discuss the future of Vision-Language-Action (VLA) models, with a strong emphasis on robotic surgery applications.

Key discussion topics included:

- Safe deployment of VLA models in high-stakes robotic systems

- Imitation learning from expert demonstrations

- Robust grounding between visual perception, language instructions, and motor actions

- Reliability and verification challenges in surgical robotics

These discussions resulted in a clear roadmap for next-stage collaborative projects centered on safe, interpretable, and scalable VLA systems for real-world robotic deployment.

Lawrence Livermore National Laboratory

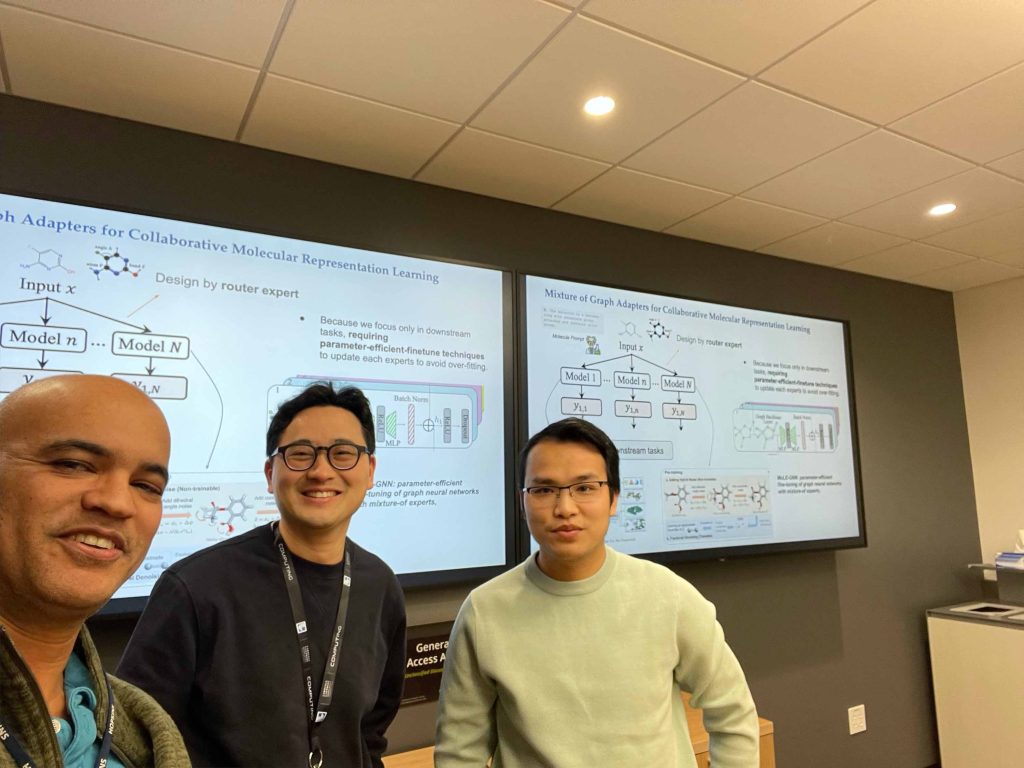

At Lawrence Livermore National Laboratory, Duy wrapped up the collaboration on scaling 3D conformer learning, with applications in AI for Science [1].

Building on this foundation, new research directions were proposed to extend 3D molecular learning toward mixture-of-agents systems. In such a system, each agent functions as a specialized foundation model, while a lightweight LLM coordinates communication, planning, and user interaction among the agents. The overarching goal is to investigate this paradigm for drug discovery enhanced by multi-modal inputs, including 3D molecular structures, textual knowledge, and experimental data. This work opens a promising path toward agentic AI systems for scientific discovery.

[1] Nguyen, Duy Minh Ho, et al. “From Fragments to Geometry: A Unified Graph Transformer for Molecular Representation from Conformer Ensembles.” ICML 2025 Generative AI and Biology (GenBio) Workshop.

University of Pennsylvania – Multi-Modal LLMs for Healthcare

University of Pennsylvania: Department of Biostatistics, Epidemiology, and Informatics (UPenn Medicine)

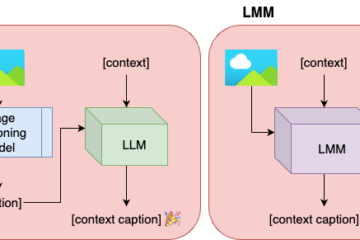

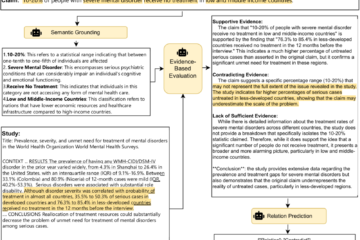

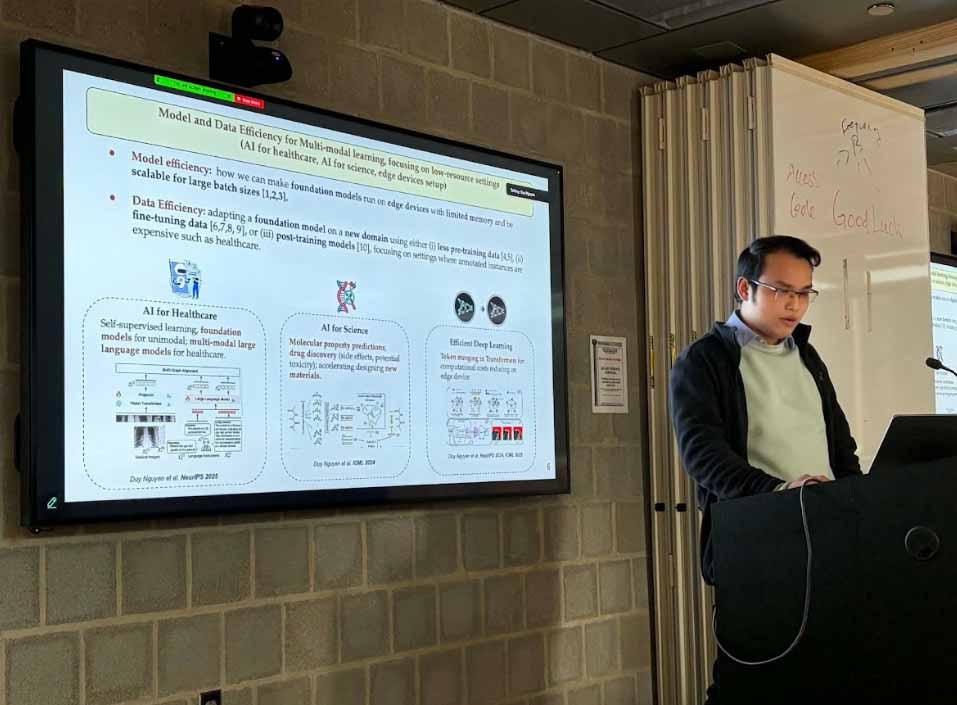

At UPenn Medicine, Duy presented his recent research on multi-modal large language models for healthcare to Prof. Li Shen's group, focusing on medical image-text-reasoning alignment [2,3,4].

He further proposed a new collaborative project centered on self-verifying, structured visual Chain-of-Thought (CoT) for medical applications. The project aims to (i) improve the reliability of LLM outputs in healthcare by making the reasoning process more interpretable and transparent, (ii) strengthen grounding fidelity between medical images and clinical text, and (iii) increase robustness against hallucinations and reasoning errors through explicit, structured verification mechanisms.

The team plans to launch this project using a dedicated medical dataset available at UPenn Medicine, marking a concrete step toward clinically reliable AI systems.

[2] Nguyen, Duy Minh Ho, et al. “LVM-Med: Learning Large-Scale Self-Supervised Vision Models for Medical Imaging via Second-order Graph Matching.” Advances in Neural Information Processing Systems 36 (2023): 27922-27950.

[3] Nguyen, Duy Minh Ho, et al. “ExGra-Med: Extended Context Graph Alignment for Medical Vision-Language Models.” The Thirty-ninth Annual Conference on Neural Information Processing Systems. 2025.

[4] Le, Huy M., et al. “Reinforcing Trustworthiness in Multimodal Emotional Support Systems.” The 40th Annual AAAI Conference on Artificial Intelligence 2026.

Duy Nguyen presenting his current research and direction

Duy Nguyen with Prof. Li Shen, head of the department.

Looking ahead

We sincerely thank our partners at Stanford University, Lawrence Livermore National Laboratory, and the University of Pennsylvania for hosting these visits and for the stimulating technical discussions. We look forward to deepening these collaborations and delivering impactful joint research outcomes in 2026 and beyond.