This year’s NeurIPS conference in San Diego, USA, was a major success for IML: four contributions were accepted, comprising three full papers and one workshop paper.

Three members of the Interactive Machine Learning (IML) department at DFKI, Duy Nguyen, Tuan Tran, and Md Abdul Kadir, represented our team at NeurIPS 2025. NeurIPS remains one of the world’s most prestigious conferences in machine learning, known for its highly selective review process and rigorous scientific standards. With an acceptance rate of approximately 25% in 2025, having multiple papers accepted is a remarkable achievement that highlights the department’s growing research impact.

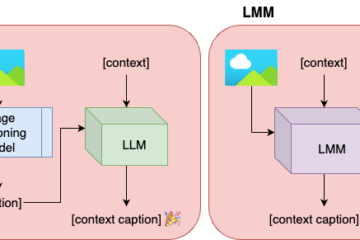

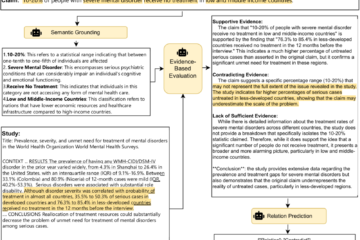

The first accepted paper, ExGra-Med [1], presents a new multi-graph alignment framework that addresses the weak vision–language grounding often seen in current medical MLLMs such as LLaVA-Med and BioMedGPT. Instead of relying on massive autoregressive training and costly instruction-following data, ExGra-Med jointly aligns images, responses, and extended captions in a unified latent space, significantly strengthening semantic coherence. The team further introduces an efficient black-box gradient training scheme that enables scalable optimization for LLMs such as LLaMA-7B. ExGra-Med matches LLaVA-Med’s performance with just 10% of the data, achieves a 20.13% gain on VQA-RAD, and surpasses strong baselines including BioMedGPT and RadFM, highlighting its promise for efficient, high-quality medical vision–language integration.

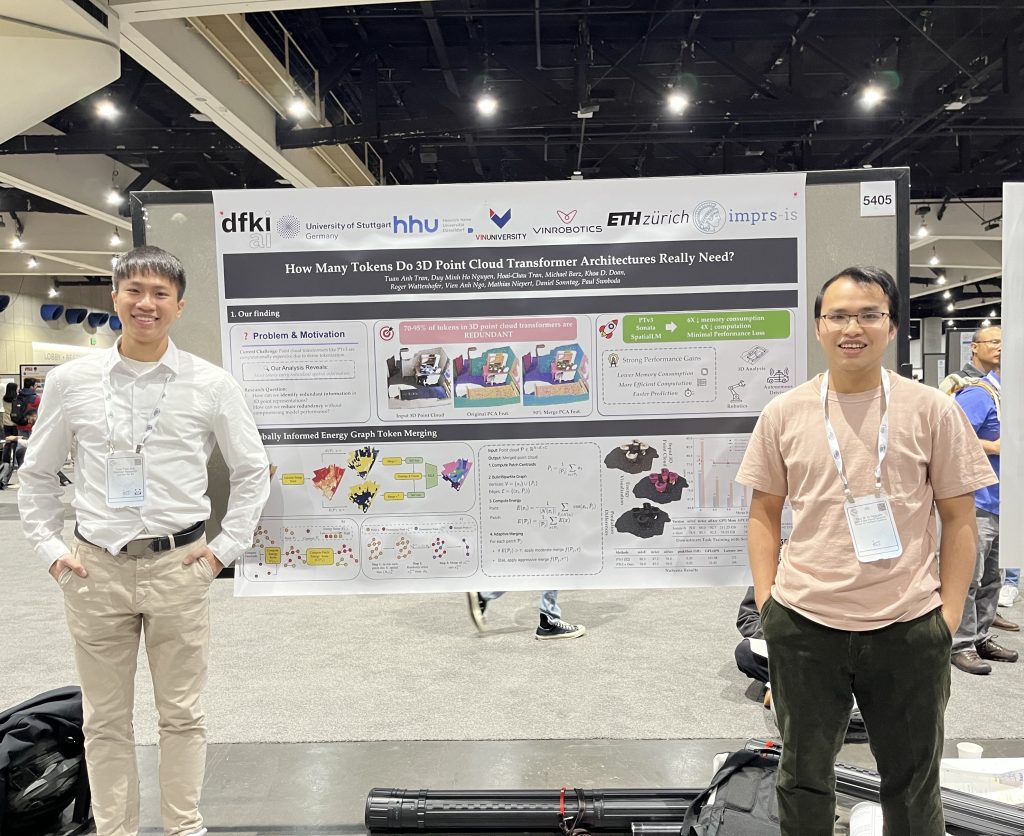

The second contribution focuses on token merging for 3D point cloud transformer models [2], introducing a novel family of methods that drastically reduce computational cost without sacrificing accuracy. The work, which has a project page at gitmerge3d.github.io, demonstrates that current state-of-the-art 3D transformers are heavily over-tokenized. With an effective token merging strategy, up to 95% of tokens can be removed during inference and training while preserving segmentation, reconstruction, and detection performance across multiple benchmarks. The approach brings substantial efficiency gains and opens new possibilities for scalable 3D perception models.
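To give a feel for what token merging means in general, here is a minimal sketch that greedily averages the most similar pair of tokens until a chosen fraction remains. It is a generic similarity-based illustration, not the method from [2]; the function names `cosine` and `merge_tokens` and the `keep_ratio` parameter are assumptions made for this example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def merge_tokens(tokens, keep_ratio):
    """Greedily merge the most similar pair until keep_ratio of tokens remain.

    Hypothetical helper for illustration only. Each group stores a running
    sum and a count, so a merged token is the mean of all tokens it absorbed.
    """
    target = max(1, round(len(tokens) * keep_ratio))
    groups = [(list(t), 1) for t in tokens]
    while len(groups) > target:
        best = None
        for i in range(len(groups)):
            for j in range(i + 1, len(groups)):
                # Cosine similarity is scale-invariant, so sums work as well as means.
                s = cosine(groups[i][0], groups[j][0])
                if best is None or s > best[0]:
                    best = (s, i, j)
        _, i, j = best
        merged = (
            [a + b for a, b in zip(groups[i][0], groups[j][0])],
            groups[i][1] + groups[j][1],
        )
        groups = [g for k, g in enumerate(groups) if k not in (i, j)] + [merged]
    return [[v / n for v in total] for total, n in groups]


# Four tokens forming two obvious clusters; keep half of them.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
reduced = merge_tokens(tokens, keep_ratio=0.5)
```

In this toy setting, `keep_ratio=0.05` would correspond to the 95% reduction mentioned above. The quadratic pair search is only for readability and would not scale to real point clouds.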

The third main conference paper introduces IS-DAAs [3], a novel importance-sampling approach designed to address the over-optimization problem in Direct Alignment Algorithms (DAAs) such as Direct Preference Optimization (DPO), a common alternative to RLHF for aligning large language models. DAAs often suffer from model drift, where continued training pushes the policy too far from the reference model, degrading performance. IS-DAAs counter this by weighting the DAA objective with an importance ratio that captures the reference policy distribution, while applying clipping to prevent high-variance updates. Extensive experiments show that IS-DAAs effectively mitigate over-optimization, particularly under low regularization, and outperform existing methods aimed at stabilizing preference-based alignment.
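The idea of weighting a DAA objective with a clipped importance ratio can be sketched as follows for a single preference pair under DPO. This is an illustration under stated assumptions, not the formulation from [3]: the direction of the ratio (reference over policy), the upper-only clipping, the default `clip_max`, and all function names are hypothetical choices made for this example.

```python
import math


def dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair: -log sigmoid(margin)."""
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    # Numerically stable softplus(-margin).
    if margin < 0:
        return -margin + math.log1p(math.exp(margin))
    return math.log1p(math.exp(-margin))


def clipped_importance_weight(logp_w, logp_l, ref_logp_w, ref_logp_l, clip_max=1.0):
    """Importance ratio pi_ref / pi_theta on the pair, clipped from above.

    Assumption: the ratio direction and the clipping rule are illustrative.
    """
    log_ratio = (ref_logp_w + ref_logp_l) - (logp_w + logp_l)
    if log_ratio >= math.log(clip_max):
        return clip_max
    return math.exp(log_ratio)


def is_weighted_dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l,
                         beta=0.1, clip_max=1.0):
    """DPO loss scaled by the clipped importance weight.

    In a real training loop the weight would typically be treated as a
    constant (no gradient flows through it); that is also an assumption here.
    """
    w = clipped_importance_weight(logp_w, logp_l, ref_logp_w, ref_logp_l, clip_max)
    return w * dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta)


# Policy identical to the reference: weight 1, loss equals log(2).
on_reference = is_weighted_dpo_loss(-10.0, -10.0, -10.0, -10.0)
# Policy that has drifted far from the reference: the pair is down-weighted.
drifted_weight = clipped_importance_weight(-2.0, -8.0, -10.0, -10.0)
```

Under these assumptions, pairs on which the policy has moved far from the reference contribute less to the update, and the clip bounds the weight so that no single pair dominates.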

In addition to the main-track papers, the team also presented a workshop paper titled AuditCopilot: Leveraging LLMs for Fraud Detection in Double-Entry Bookkeeping [4]. This work explores how modern LLMs can support auditors by automatically detecting anomalies, inconsistencies, and potential fraud patterns in complex financial records. By integrating domain-specific reasoning strategies with structured accounting signals, AuditCopilot shows promising results in reducing manual workload and enhancing the accuracy of financial audits.

As in previous years, NeurIPS 2025 offered a rich program featuring invited talks, hundreds of posters, cutting-edge tutorials, and a diverse set of workshops across machine learning, optimization, generative modeling, and AI safety. Highlights this year included workshops on Multi-modal Foundation Models and Large Language Models for Life Sciences, New Perspectives in Graph Machine Learning, and GenAI for Health: Potential, Trust, and Policy Compliance, all highly relevant to ongoing research in the IML department. Beyond the scientific program, our team engaged with leading groups in AI, robotics, and biomedical research at the National University of Singapore and Stanford University, strengthening ongoing collaborations and laying the foundation for new joint projects in 2026 and beyond.

We thank our partners from the National University of Singapore (NUS), Stanford University, and ETH Zürich.

NeurIPS 2025 in San Diego, USA

Tuan Tran, Duy Nguyen, and Md Abdul Kadir (from left to right) from IML at the NeurIPS conference

Tuan Tran and Duy Nguyen present their work at the conference

References

[1] Duy M. H. Nguyen, Nghiem Diep, Trung Nguyen, Hoang-Bao Le, Tai Nguyen, Tien Nguyen, Tin T. Nguyen, Nhat Ho, Pengtao Xie, Roger Wattenhofer, James Zhou, Daniel Sonntag, Mathias Niepert. ExGra-Med: Extended Context Graph Alignment for Medical Vision-Language Models. Advances in Neural Information Processing Systems (NeurIPS) 2025.

[2] Tuan Anh Tran, Duy M. H. Nguyen*, Hoai-Chau Tran, Michael Barz, Khoa D. Doan, Roger Wattenhofer, Vien Anh Ngo, Mathias Niepert, Daniel Sonntag, Paul Swoboda. How Many Tokens Do 3D Point Cloud Transformer Architectures Really Need? Advances in Neural Information Processing Systems (NeurIPS) 2025.

[3] Phuc Minh Nguyen, Ngoc-Hieu Nguyen, Duy M. H. Nguyen, Anji Liu, An Mai, Binh T. Nguyen, Daniel Sonntag, Khoa D. Doan. Mitigating Reward Over-optimization in Direct Alignment Algorithms with Importance Sampling. Advances in Neural Information Processing Systems (NeurIPS) 2025.

[4] Md Abdul Kadir, Sai Suresh Macharla Vasu, Sidharth S. Nair, Daniel Sonntag. AuditCopilot: Leveraging LLMs for Fraud Detection in Double-Entry Bookkeeping. Generative AI in Finance workshop at NeurIPS 2025.