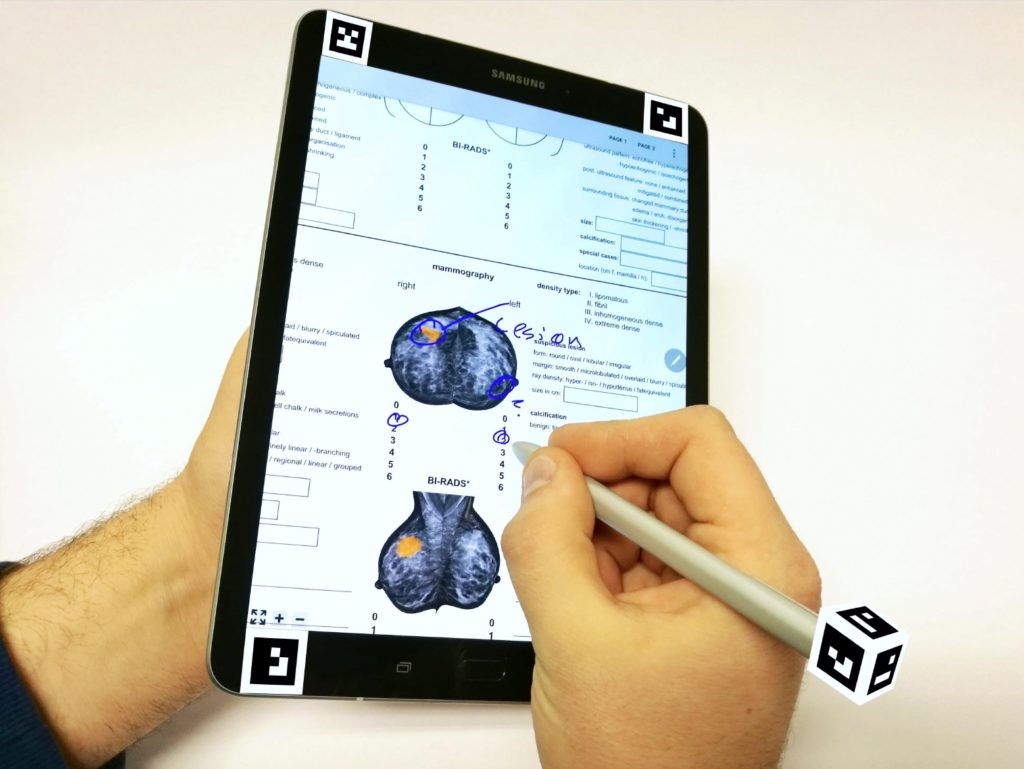

Recently, a number of research efforts have addressed user interaction in virtual reality, but only a few are concerned with interaction using real-world objects. For complex data entry, we use a tablet with an integrated stylus, which is represented in virtual reality through real-time tracking of markers. This allows data entry and the refinement of machine learning models to be carried out directly in the virtual space.

The OculuCam system is based on low-cost webcam hardware (Logitech c930) attached to the Oculus Rift with a lightweight, 3D-printed magnetic mount. Because the mount couples the camera rigidly and precisely to the headset, the OculuCam system can deduce the camera's position relative to the virtual space and render the camera image in place in real time.
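
Rendering the camera image in place amounts to chaining two rigid transforms: the tracked headset pose and the fixed headset-to-camera offset defined by the mount. The following minimal Python sketch illustrates that composition under stated assumptions. The helper names (`make_transform`, `compose`, `transform_point`), the right-handed, y-up frame with -z as the viewing direction, and all numeric values are hypothetical and are not measurements of the actual OculuCam mount.

```python
# Minimal sketch (hypothetical values): place the webcam in the virtual scene by
# chaining the tracked headset pose with the fixed headset-to-camera offset.
import math

Matrix = list[list[float]]

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def rot_y(angle_rad: float) -> Matrix:
    """3x3 rotation about the vertical y axis (positive angle = turn left)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def make_transform(rotation: Matrix, translation: list[float]) -> Matrix:
    """4x4 homogeneous transform from a 3x3 rotation and a translation in metres."""
    return [rotation[i] + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Return a @ b, i.e. the transform that applies b first and then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: Matrix, point: list[float]) -> list[float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = point
    return [t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3)]


# Hypothetical headset pose: 1.6 m above the scene origin, turned 90 degrees left.
world_from_headset = make_transform(rot_y(math.pi / 2), [0.0, 1.6, 0.0])
# Hypothetical mount offset: camera 3 cm below and 8 cm in front of the headset
# origin (right-handed frame, y up, the headset looks along -z).
headset_from_camera = make_transform(IDENTITY, [0.0, -0.03, -0.08])

world_from_camera = compose(world_from_headset, headset_from_camera)
camera_position = transform_point(world_from_camera, [0.0, 0.0, 0.0])
# camera_position ≈ [-0.08, 1.57, 0.0]: 8 cm in front of the head, which now faces -x
```

Because the mount offset is constant, only `world_from_headset` would need to be refreshed each frame; the product then gives the camera pose used to place the video image in the scene.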

To track real-world objects such as the tablet or the stylus, we use ArUco markers, which the OculuCam detects in its camera image. User input on the tablet is captured and analyzed in real time, and the results are passed to the virtual reality application, where they are used to interactively refine the underlying machine learning model, e.g., for medical decision support or faceted search applications.
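
Once a marker's pose is known in camera coordinates, a stylus event on the tablet can be placed in the virtual scene by extending the same chain of rigid transforms. The sketch below is a hypothetical illustration, not the OculuCam implementation. It assumes that the marker pose comes from a pose estimator such as OpenCV's `cv2.solvePnP` applied to the detected marker corners (expressed in OpenCV's x-right, y-down, z-forward camera convention), that the marker origin lies on the screen's top-left corner, and that the screen size, poses, and helper names (`pen_to_marker_frame`, `pen_to_world`) are made up for the example.

```python
# Minimal sketch (hypothetical layout and values): map a stylus event on the
# tablet screen to a 3D point in the virtual scene via the tracked ArUco marker.
Matrix = list[list[float]]

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
FLIP_YZ = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]


def make_transform(rotation: Matrix, translation: list[float]) -> Matrix:
    """4x4 homogeneous transform from a 3x3 rotation and a translation in metres."""
    return [rotation[i] + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Return a @ b, i.e. the transform that applies b first and then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: Matrix, point: list[float]) -> list[float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = point
    return [t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3)]


# OpenCV cameras use x right, y down, z forward; the scene uses x right, y up,
# z backward. This fixed transform converts OpenCV camera coordinates to the scene's.
GL_FROM_CV = make_transform(FLIP_YZ, [0.0, 0.0, 0.0])


def pen_to_marker_frame(u_px, v_px, screen_px, screen_m):
    """Screen pixels (origin top-left, v pointing down) to metres in the marker frame.

    Hypothetical layout: marker origin on the screen's top-left corner, with
    x pointing right, y up and z out of the screen."""
    (w_px, h_px), (w_m, h_m) = screen_px, screen_m
    return [u_px * w_m / w_px, -v_px * h_m / h_px, 0.0]


def pen_to_world(u_px, v_px, world_from_camera, cv_camera_from_marker, screen_px, screen_m):
    """Chain world <- camera (scene convention) <- camera (OpenCV) <- marker."""
    world_from_marker = compose(compose(world_from_camera, GL_FROM_CV), cv_camera_from_marker)
    return transform_point(world_from_marker, pen_to_marker_frame(u_px, v_px, screen_px, screen_m))


# Hypothetical inputs: camera 1.57 m above the origin looking along -z, and a marker
# 0.4 m in front of it, squarely facing the camera (pose in OpenCV convention).
world_from_camera = make_transform(IDENTITY, [0.0, 1.57, 0.0])
cv_camera_from_marker = make_transform(FLIP_YZ, [0.0, 0.0, 0.4])

tip = pen_to_world(960, 540, world_from_camera, cv_camera_from_marker,
                   screen_px=(1920, 1080), screen_m=(0.24, 0.135))
# tip ≈ [0.12, 1.5025, -0.4]: the screen centre, 0.4 m in front of the camera
```

The same chain could also place the tablet model itself, by transforming the screen's corner points instead of a single pen position.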